Part 2 of our 5-part series “The 3 Body Problem”. Previous posts: Overview & Part 1

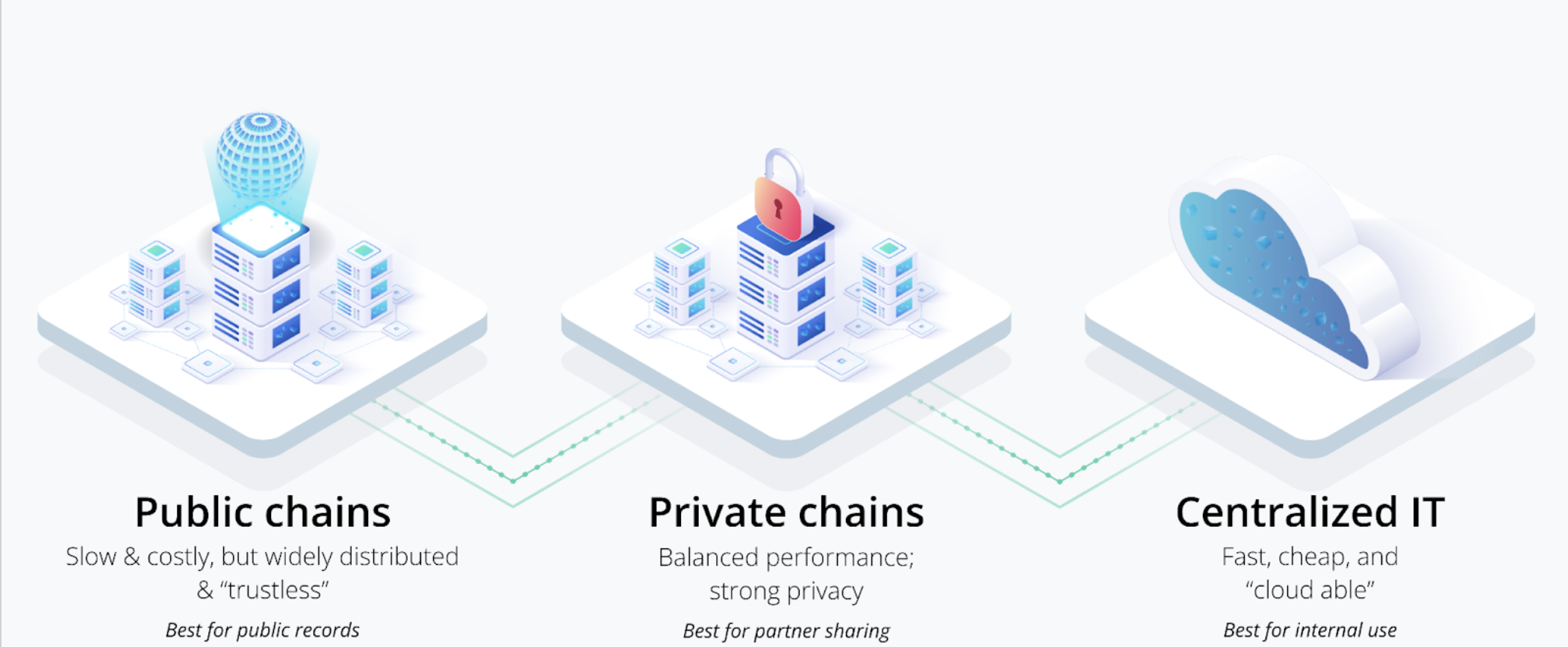

In our previous Three Body Problem post we looked at the continuum on which these technologies sit. In this post we’ll dive deeper into the relative strengths (and weaknesses) of each, to help us understand how to compose them to best effect. Let’s get started…

Centralized Solutions – Classic IT Always Wins on Bandwidth and Cost

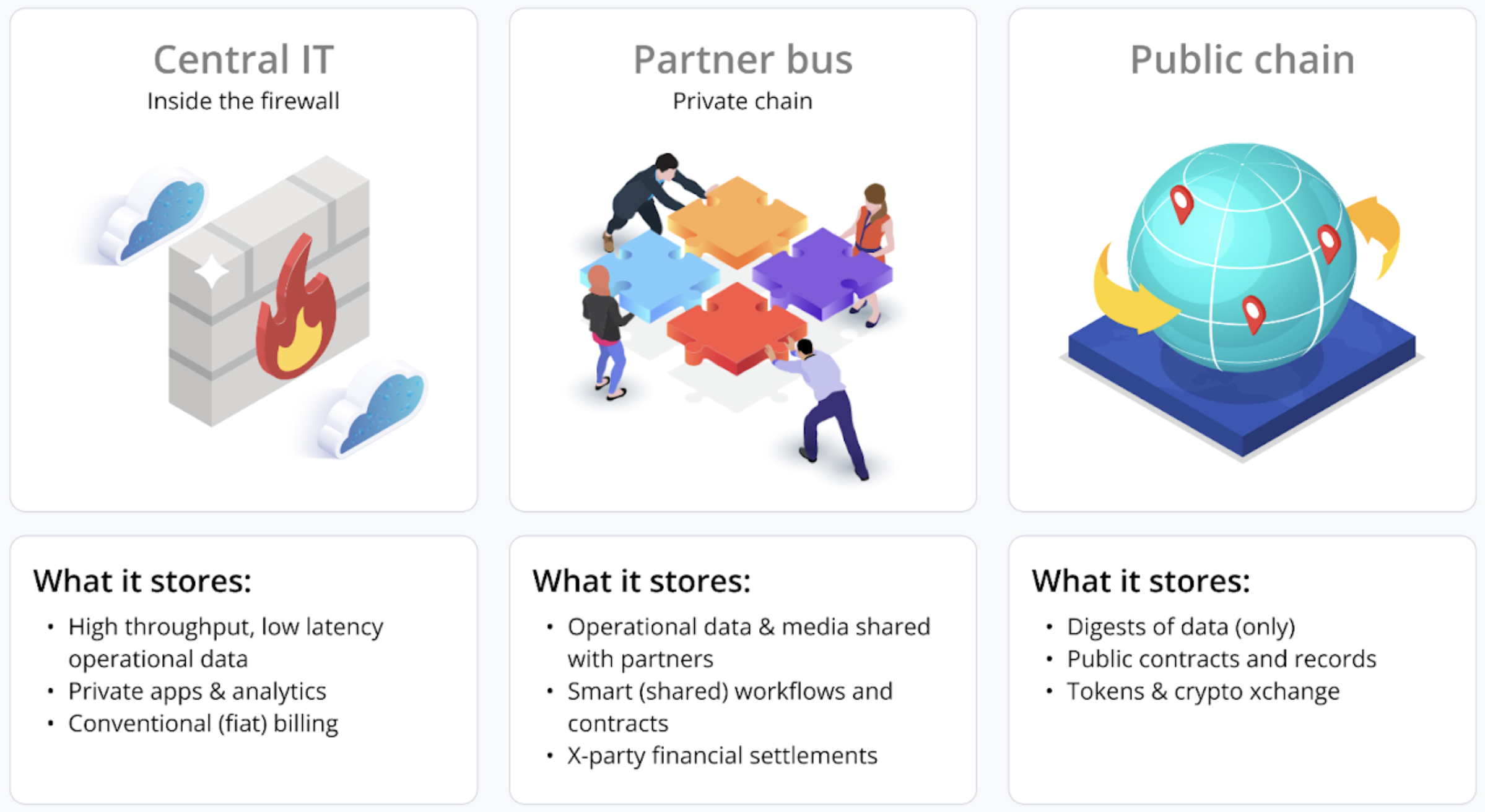

Centralized solutions include virtually all existing applications and every use of today’s on-premises and cloud services.

These solutions span the classic range of IT infrastructure: operational databases, data warehouses for analytics, compute, file storage, and more. Cloud-based centralized services and application designs will continue to make up the majority of your company’s IT portfolio for durable, structural reasons:

- Centralized solutions will always offer the highest throughput, the lowest latency, and the lowest cost structure. This is unsurprising: compute, storage, and network requirements are minimized when an application or IT solution is “talking to itself”. With no need to replicate data, reach agreement with other parties, or transmit code or data to other geographies, latency is minimized, throughput and bandwidth are maximized, and costs are limited to the storage and compute needed to perform the actual function.

- Centralized solutions have a trivial answer to many privacy, security, and governance requirements: the firewall. A large percentage of data – and the vast majority of systems and services – within a company are never exposed to the outside world. Without intending to minimize the challenges of securing modern IT infrastructure, it is still easy to see that keeping entire systems inside the firewall is far easier than operating public or shared infrastructure and having to make row-by-row and API-call-by-API-call decisions about what to expose or hide. Centralized IT will always offer easier ways to “keep private things private” than trying to make data public while also keeping it hidden from prying eyes, as would be required when using a public chain to store data. Complex cryptographic approaches, such as zkSNARKs or homomorphic encryption, will never fully replace straightforward firewalls because they are inherently more costly and complex on a per-transaction or per-application basis.

- Centralized solutions offer the fastest innovation and development speed. Again, this is obvious after a moment of reflection: if a company needs to seek permission from others (especially a worldwide consortium of protocol developers, miners, and node operators with wildly disparate needs and economic goals) to make a change, change is inherently going to be slow, incremental, and fraught with challenges. When a department or company needs to consult only itself, adopting a newly launched cloud service, integrating with another system, or adjusting an authentication solution will be as fast and efficient as possible.

- Centralized solutions are intermediate in their environmental impact. Because they typically serve only their owner and must be sized for peak usage, centralized systems often suffer from low utilization: 10-15% is typical for enterprises, meaning 85-90% of capacity sits idle. This makes them worse than cloud-based private chains (see below) but better than legacy public chains based on environmentally destructive Proof of Work algorithms.

Private Chains – Best for Partner Data Sharing and the Environment

Private/permissioned chains occupy an important space between centralized services and fully public chains. Because they support a controlled population of business partners, such as the part suppliers, logistics providers, and manufacturing partners in a supply chain relationship, they make data sharing much easier than centralized IT does, where creating a “single source of truth” from the raw building blocks of cloud or on-prem infrastructure is a heavy lift.

Precisely because this population is limited (and because private chains specialize in asymmetric sharing patterns), the challenge of placing “private information in a public space”, which arises with a public chain, doesn’t need to be solved, eliminating the need to adopt complex approaches such as zkSNARKs.

Let’s take a look at where private chains excel:

- Private chains offer a single source of truth among business partners for operational data. Because they can replicate large amounts of data with high throughput and low latency (unlike public chains), private chains make it easier to share operational data in real time. Smart contracts make it easy for partners to also share common policies, workflows, and data integrity constraints, none of which need be exposed to the public.

- Private chains offer the most sophisticated data privacy, data protection, and data governance of all three options. Because private chains are designed from the ground up with selective information sharing as a key feature, they generally have far more sophisticated (and well-tested) data controls than either “roll your own” centralized solutions built in-house or public chains, with their one-size-fits-all symmetric key encryption. Private chains delivered as SaaS solutions also get the conventional SaaS economic benefit of amortizing the cost of building, securing, and maintaining complex governance and data access controls over many customers, a benefit unavailable to one-off centralized solutions.

- Private chains offer balanced innovation speed with rapid development time. It may seem odd at first blush, but private chains usually offer the fastest time to market: They require less manual buildout than a built-from-scratch centralized application, while offering more built-in capabilities (especially when offered in a SaaS deployment model) than a public chain, making them the closest to a working solution out of the box. Private chain users can innovate as fast as their joint decision making allows, without the overhead of buy-in from miners, node operators, and open source developers who could be anywhere on the planet, and who may not align with the business partnership’s needs particularly well.

- Private chains can offer the lowest infrastructure costs and environmental footprint of all three categories. It may seem odd that the “intermediate” solution beats both extremes, but private chains have the unique advantage that they can be delivered as SaaS offerings. This lets them offer fast innovation and minimal operational burden (and infrastructure cost), and take advantage of multi-tenanted approaches that deliver significant economies of scale at high utilization. By contrast, centralized IT teams rarely achieve the breadth and variety of use needed for high utilization. Public chains, especially those that, like Ethereum, rely on Proof of Work to mint transactions, can have devastatingly large environmental impacts, and all public chains replicate data everywhere, regardless of consumption or access patterns, which can create a much higher carbon footprint than the highly targeted replication of a private chain.
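To make “selective information sharing” a little more concrete, here is a minimal Python sketch of field-level visibility among supply-chain partners. The record, the role names (`manufacturer`, `supplier`, `logistics`), the `VISIBILITY` policy, and the `view_for` helper are all hypothetical illustrations, not the API of any particular private chain product. It shows the asymmetric pattern: the logistics provider sees quantities and delivery windows but not the negotiated price, while the supplier sees the price but not the carrier details.

```python
# Hypothetical shipment record shared among supply-chain partners.
SHIPMENT = {
    "order_id": "PO-1001",
    "part_number": "AX-17",
    "quantity": 500,
    "unit_price": 12.40,
    "carrier": "FastFreight",
    "delivery_window": "2024-06-01/2024-06-03",
}

# Hypothetical sharing policy: which fields each partner role may see.
VISIBILITY = {
    "manufacturer": {"order_id", "part_number", "quantity",
                     "unit_price", "carrier", "delivery_window"},
    "supplier": {"order_id", "part_number", "quantity", "unit_price"},
    "logistics": {"order_id", "quantity", "carrier", "delivery_window"},
}


def view_for(record: dict, role: str) -> dict:
    """Return only the fields the given partner role is allowed to see.

    Unknown roles get an empty view (deny by default).
    """
    allowed = VISIBILITY.get(role, set())
    return {field: value for field, value in record.items() if field in allowed}


print(view_for(SHIPMENT, "logistics"))
```

In a real private chain, a policy like this would more likely be enforced before replication, so that fields a partner may not see never reach that partner’s node at all; filtering at read time, as here, is simply the shortest way to show the idea.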

Public Chains – Best for Archiving Truly Public Data

Not all applications or solutions require placing data into a planet-spanning public record, but when they do, public chains offer the broadest degree of sharing, and require the least amount of trust, to achieve this outcome.

- Public chains are best for sharing public and permanent information. With broad replication across geopolitical boundaries, nation states, cloud service providers, and a mix of both corporate and individual ownership, public chains such as Ethereum effectively create a public and permanent (irreversible and irrefutable) archive, which is why they’re used for cryptocurrencies. Everywhere they’re stored, these facts are held in the same order, so it’s also possible to establish cause and effect (i.e., whether one fact came before or after another fact), making it possible to model and audit changes in ownership, such as transfers of value or the ownership of objects, both real and virtual.

- This planet-wide dispersal of information comes with both literal and figurative costs. Ethereum, the current “gold standard” in public chains that support data and smart contracts, has transaction rates 7-8 orders of magnitude lower than comparable centralized IT systems, while its latencies can be 7 orders of magnitude higher; combined, that makes its price/performance up to 15 orders of magnitude worse than a typical cloud database such as Amazon DynamoDB. Storage costs for IPFS (especially when coupled to Ethereum to store hashes of files) are also orders of magnitude higher than public cloud storage, particularly when similar levels of durability and availability are required, making this a good solution only when an application genuinely needs the broadest degree of sharing.

- Public chains can offer sophisticated on-chain (“token”) currency mechanisms. Though not intrinsically required, most public chains also feature one or more built-in currency mechanisms, enabling them to store and transfer value and, in some cases, exchange it through inter-chain / inter-token swap mechanisms. This can facilitate building cryptocurrency-based applications, though it typically does not provide useful leverage when integrating with a conventional (“fiat”) payment or billing system, such as Stripe or Plaid.

- Public chains innovate slowly and offer minimal integration solutions. Integration with cloud services is minimal to nonexistent because, by design and intent, public chains eschew any form of deep integration with the public cloud. Changes to these systems, once they are in production, are generally slow, as open source developers, miners, and operators all have to collectively agree to migrate a complex arrangement of on-chain incentives while keeping the codebase secure, available, and backwards compatible. This complex interplay of economic and technological incentives makes public chains slower to innovate and migrate than private chains, and far slower to adapt and evolve than centralized IT.

- Support for heterogeneous data types is limited and complex to achieve, often requiring multiple “on” and “off” chain solutions to be stitched together to represent common business artifacts, such as files. Also, because they must service essentially any data model from any customer anywhere in the world, public chains are “typeless”, offering only key/value stores that require adopters to erect data models on top of them, akin to creating a complex ORM abstraction on top of an underlying database (but one with limited query and update functionality).

- Public chains vary in their environmental footprint, but the “gold standard” (Ethereum) currently has a very poor environmental record. Newer “Proof of Stake” approaches reduce the cost of minting transactions, but all public chains are structurally required to spend a higher percentage of their compute (and thus of their carbon footprint) on achieving Byzantine fault tolerance and worldwide consensus than centralized or private chain approaches. They are also required to replicate data everywhere, regardless of actual need. Together, these forms of overhead make them permanently more compute (and carbon) intensive on a per-transaction basis.
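The “typeless” key/value point can be illustrated with a small Python sketch. `KeyValueStore` is an in-memory stand-in for on-chain contract storage (an assumption: real contract storage has its own key and value formats), and `License` / `LicenseRepository` are hypothetical application types, chosen to foreshadow the image licensing example, that play the role of the ORM layer the adopter has to build.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass


class KeyValueStore:
    """Stand-in for typeless chain storage: string keys map to raw bytes."""

    def __init__(self) -> None:
        self._data: dict[str, bytes] = {}

    def put(self, key: str, value: bytes) -> None:
        self._data[key] = value

    def get(self, key: str) -> bytes | None:
        return self._data.get(key)


@dataclass
class License:
    """Hypothetical application type; the store knows nothing about it."""
    image_id: str
    licensee: str
    expires: str  # ISO 8601 date string; the store has no notion of dates


class LicenseRepository:
    """ORM-style mapping between License objects and raw key/value entries."""

    PREFIX = "license:"

    def __init__(self, store: KeyValueStore) -> None:
        self.store = store

    def _key(self, image_id: str, licensee: str) -> str:
        # The key layout is an application convention, not a store feature.
        return f"{self.PREFIX}{image_id}:{licensee}"

    def save(self, lic: License) -> None:
        payload = json.dumps(asdict(lic)).encode("utf-8")
        self.store.put(self._key(lic.image_id, lic.licensee), payload)

    def load(self, image_id: str, licensee: str) -> License | None:
        raw = self.store.get(self._key(image_id, licensee))
        return None if raw is None else License(**json.loads(raw))
```

Note what the store itself cannot do: there is no way to ask for “all licenses expiring this month” without scanning every key or maintaining a separate index by hand, which is the limited query functionality described above.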

Due to the limitations described above, most IT solutions that do employ public chains store only a digest of their data (typically the root of a Merkle tree) rather than treating the public chain as a real-time operational data store. Because one digest can cover many transactions, this amortizes the cost, environmental impact, and latency of public chain overhead across a single, composite “write”.
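A minimal sketch of the digest approach in Python, using only the standard library: a batch of records is hashed into a single 32-byte Merkle root, which is the only value that would need to be written on-chain, and any individual record can later be shown to belong to that batch with a short inclusion proof. The function names are hypothetical; the 0x00/0x01 prefixes for leaf and interior hashes follow the convention used by Certificate Transparency (RFC 6962), and an odd node at any level is carried up unchanged. This illustrates the technique rather than any particular chain’s or anchoring service’s format.

```python
from __future__ import annotations

import hashlib


def _leaf_hash(record: bytes) -> bytes:
    # 0x00 prefix for leaves, 0x01 for interior nodes, so a leaf can never
    # be mistaken for an interior node.
    return hashlib.sha256(b"\x00" + record).digest()


def _node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()


def _next_level(level: list[bytes]) -> list[bytes]:
    nxt = [_node_hash(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
    if len(level) % 2 == 1:
        nxt.append(level[-1])  # odd node is carried up unchanged
    return nxt


def merkle_root(records: list[bytes]) -> bytes:
    """The single digest that would be anchored on-chain for the whole batch."""
    if not records:
        raise ValueError("need at least one record")
    level = [_leaf_hash(r) for r in records]
    while len(level) > 1:
        level = _next_level(level)
    return level[0]


def merkle_proof(records: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the flag is True if the sibling is on the right."""
    level = [_leaf_hash(r) for r in records]
    proof: list[tuple[bytes, bool]] = []
    while len(level) > 1:
        sibling = index ^ 1
        if sibling < len(level):  # no sibling when this node is carried up
            proof.append((level[sibling], sibling > index))
        level = _next_level(level)
        index //= 2
    return proof


def verify(record: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the root from one record and its proof."""
    h = _leaf_hash(record)
    for sibling, sibling_on_right in proof:
        h = _node_hash(h, sibling) if sibling_on_right else _node_hash(sibling, h)
    return h == root


batch = [f"tx-{i}".encode() for i in range(5)]
root = merkle_root(batch)
print(root.hex(), verify(batch[3], merkle_proof(batch, 3), root))
```

A proof grows only logarithmically with batch size (about 20 sibling hashes for a batch of a million records), which is what makes anchoring one root per batch practical.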

Whew, that was a lot of analysis. In the next post we’ll look at a practical example: how to build an image licensing business, first using a conventional (centralized) approach, then as an NFT.